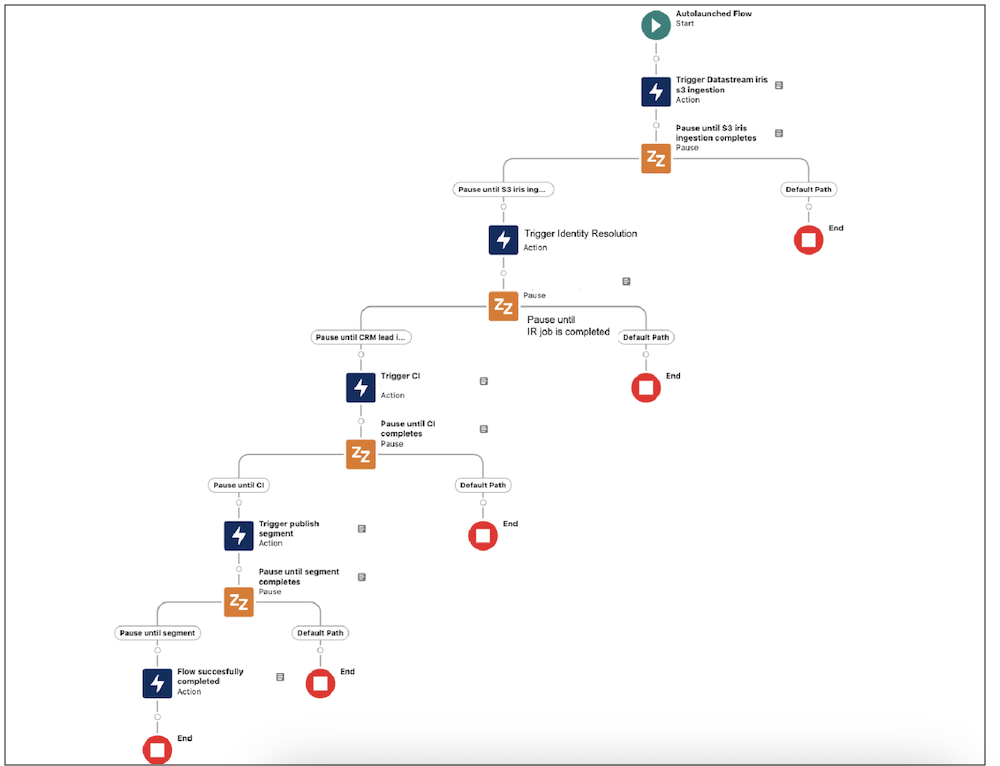

Big news for the Salesforce Admin community: The power of Flow Orchestration is officially at your fingertips. Previously a paid add-on, Orchestration is now a standard Flow type included for all Salesforce customers (subject to edition limits for Flow). For admins, this is more than just a product update. Flow Orchestration is a major toolkit […]