Are you a Salesforce Admin who wants to streamline and optimize key Data Cloud processes such as ingestion, identity resolution, calculated insights (CIs), segments, and activation? If so, you’ve come to the right place. In this blog post, we’ll explore how to use Salesforce Data Cloud and Flow together to build custom workflows that meet your organization’s unique needs. We’ll also cover the do’s and don’ts of configuring workflows for Data Cloud. By chaining Data Cloud actions together and defining trigger conditions based on your business requirements, you can take advantage of the powerful capabilities of Flow to optimize your Data Cloud processes.

With the Winter ’23 Release, the following Data Cloud actions are available in autolaunched flows:

- Refresh DataStream (Salesforce CRM and Amazon S3 ingestion)

- Run Identity Resolution

- Publish Calculated Insights

- Publish Segments and Activate

As Data Cloud continues to evolve, more actions will be available for use with Flow and other platform capabilities. For now, keep reading for an example of how you can use Flow to orchestrate Data Cloud workflow processes.

Use case: Automating a workflow

Your organization wants to compensate customers who were affected by a technical failure. It plans to deposit 500 reward points into each impacted customer’s account and send an apology email letting them know about the reward.

To do this, your organization wants to publish CIs once new data is ingested and the identity resolution job has run. Publishing the CIs calculates the impact and duration of the failure and surfaces details such as each customer’s membership ID. Once the CIs are published, you can materialize segments to query the newly refreshed data and then kick off activation. Finally, an apology email is sent to let customers know about the reward.

This workflow needs to be automated so that customers are compensated every time there’s a technical failure.

Here are the key platform features you can use to set this up in Flow:

- Platform events

- Record-triggered flows

- Autolaunched flows (your main workflow)
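To make the division of labor concrete, here is a minimal Python simulation of how these three pieces interact. It is not Salesforce code: it assumes each record-triggered flow publishes one completion event per action, and the event API names (such as `Data_Ingestion_Complete__e`) are hypothetical. The main flow starts an action, pauses until that action’s event arrives, and only then moves on.

```python
# Hypothetical platform event API names, one per Data Cloud action in the main flow.
PIPELINE = [
    ("Refresh DataStream", "Data_Ingestion_Complete__e"),
    ("Run Identity Resolution", "Identity_Resolution_Complete__e"),
    ("Publish Calculated Insights", "Calculated_Insight_Complete__e"),
    ("Publish Segments and Activate", "Segment_Publish_Complete__e"),
]


def run_workflow(received_events):
    """Simulate the main autolaunched flow over a sequence of published events.

    Each action is started, then the flow "pauses" by consuming events until
    the one that action waits for arrives. Returns a log of what happened.
    """
    log = []
    events = iter(received_events)
    for action, expected_event in PIPELINE:
        log.append(f"start: {action}")
        for event in events:  # shared iterator: continues where the last step stopped
            if event == expected_event:
                log.append(f"resume on: {event}")
                break
        else:
            # Ran out of events: the workflow stays paused at this step.
            log.append(f"paused: waiting for {expected_event}")
            return log
    log.append("send apology email")
    return log


for line in run_workflow([event for _, event in PIPELINE]):
    print(line)
```

In the simulation, a missing event leaves the workflow paused at that step, so later steps such as publishing segments never run on incomplete data.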

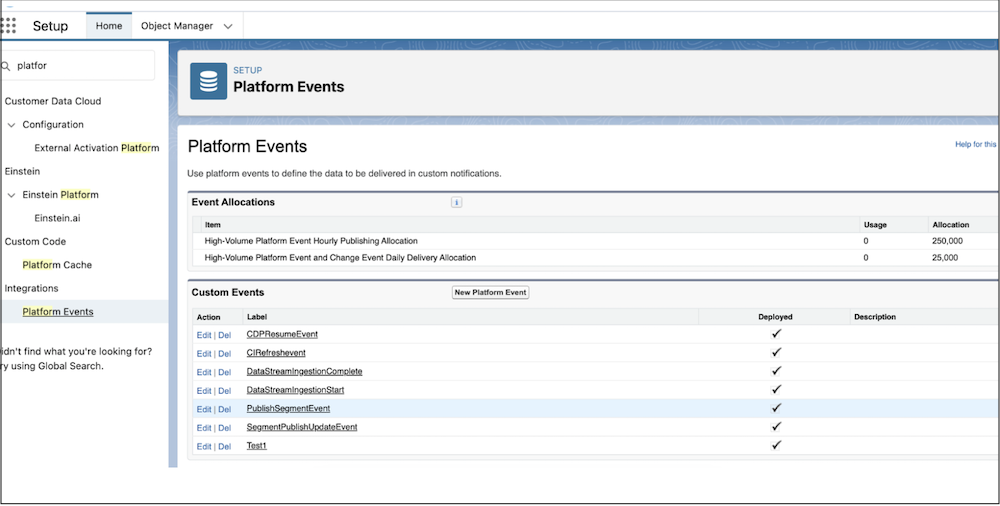

Create platform event(s)

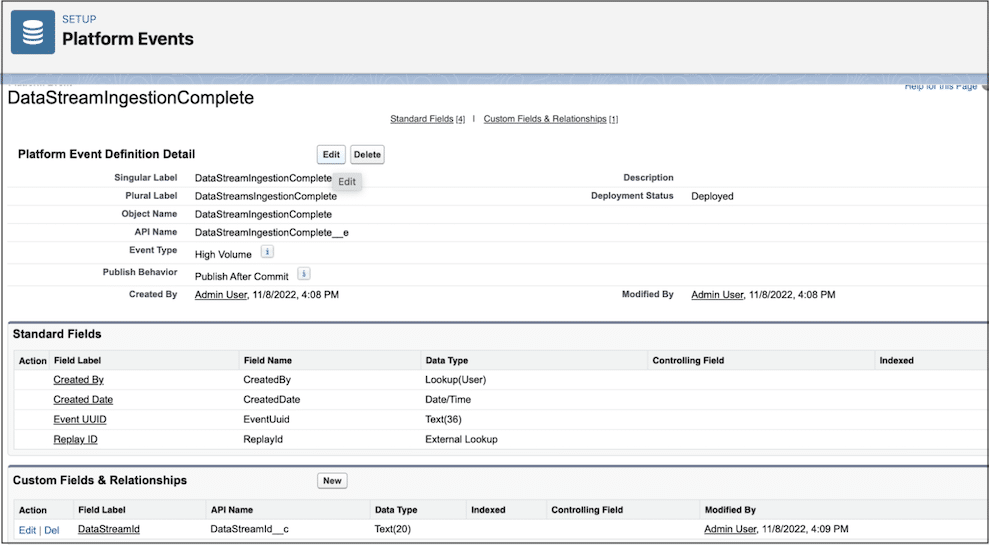

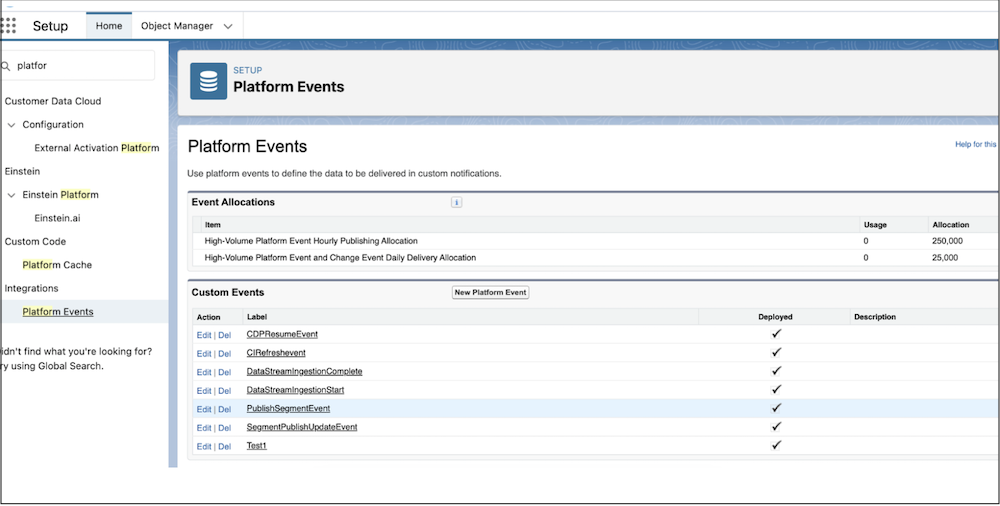

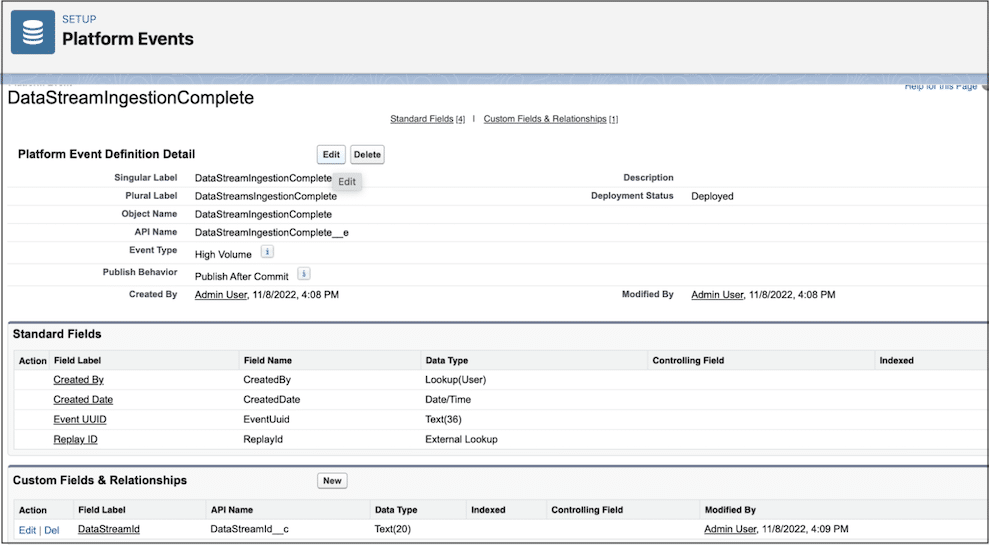

Create a custom platform event by going to Setup > Platform Events. You need one platform event for each action you intend to use in your main autolaunched flow. For our use case, create one event for each of the following actions:

- Refresh DataStream

- Run Identity Resolution

- Publish Calculated Insights

- Publish Segment

As you create each platform event, also add at least one custom field to it. The record-triggered flow (the next step after creating platform events) populates this field when it publishes the event. For example, for a “Refresh DataStream” event, you can add a field named Data Stream ID. For CIs, you can add a field named Calculated Insight Name.

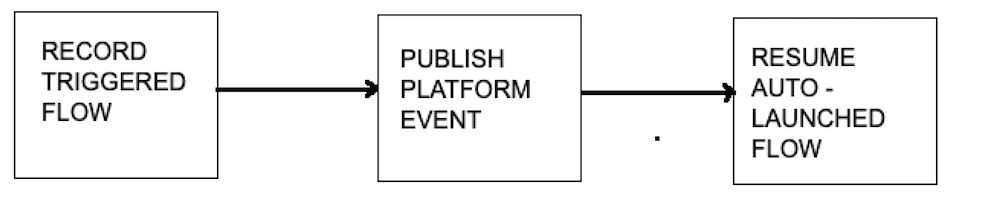

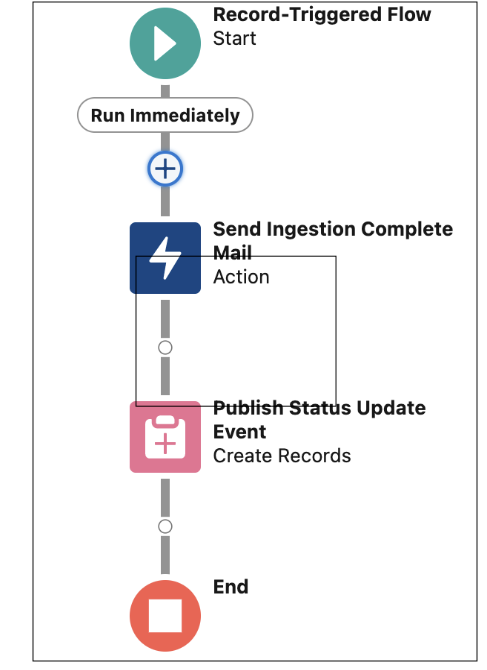

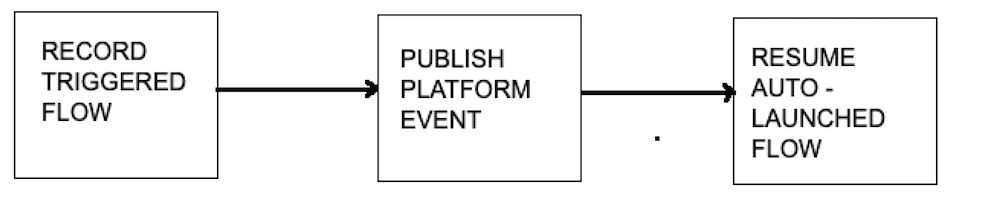

The next step is to create a record-triggered flow. In our use case, the record-triggered flow is what publishes the platform event (that is, creates an event record with its field populated). The paused autolaunched flow (described later in this post) resumes only after that platform event is published.
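The pause-and-resume handshake depends on matching the right event. The Python sketch below, again a simulation with hypothetical event and field names, shows the kind of filter you might configure when the main flow pauses: resume only on the expected event type whose key field (for example, the DataStream ID) matches the one this workflow started.

```python
def find_resume_event(published_events, event_type, key_field, expected_value):
    """Return the first published event that should resume the paused flow,
    or None if the flow must keep waiting."""
    for event in published_events:
        # Both the event type and the key field must match; an ingestion event
        # for a different data stream must not resume this workflow.
        if event.get("type") == event_type and event.get(key_field) == expected_value:
            return event
    return None


# Hypothetical event messages, modeled as plain dictionaries.
published = [
    {"type": "Data_Ingestion_Complete__e", "Data_Stream_ID__c": "stream-B"},
    {"type": "Data_Ingestion_Complete__e", "Data_Stream_ID__c": "stream-A"},
]
match = find_resume_event(
    published, "Data_Ingestion_Complete__e", "Data_Stream_ID__c", "stream-A"
)
print(match)
```

Filtering on the key field keeps one workflow from resuming on another data stream’s ingestion event.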

Create record-triggered flow(s)

The record-triggered flow publishes the platform event you created in the previous step. That event, in turn, resumes your paused main autolaunched flow, which we’ll get to later.

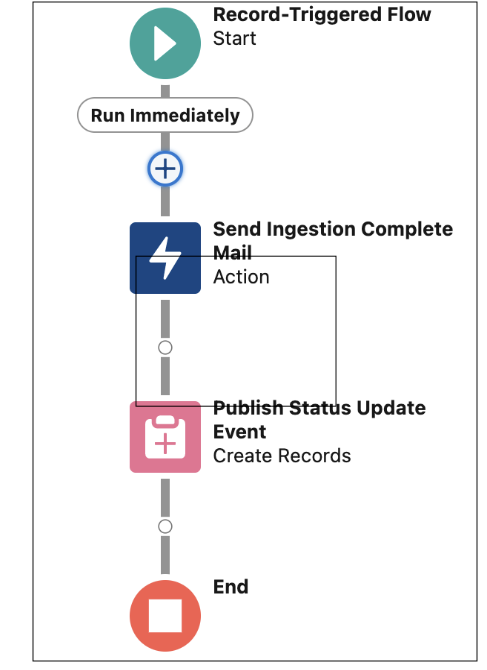

To create a record-triggered flow:

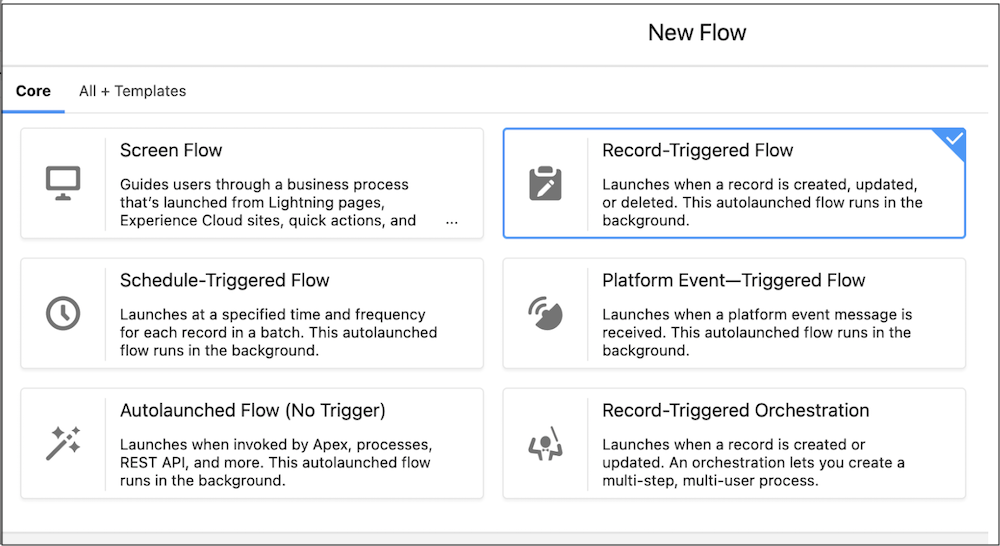

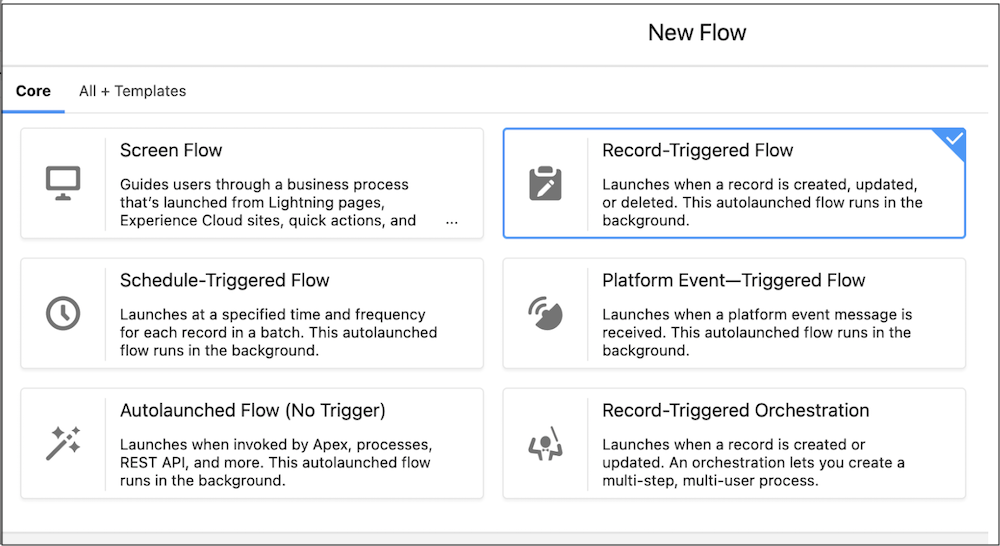

1. Go to Setup > Flows > New Flow > Record-Triggered Flow.

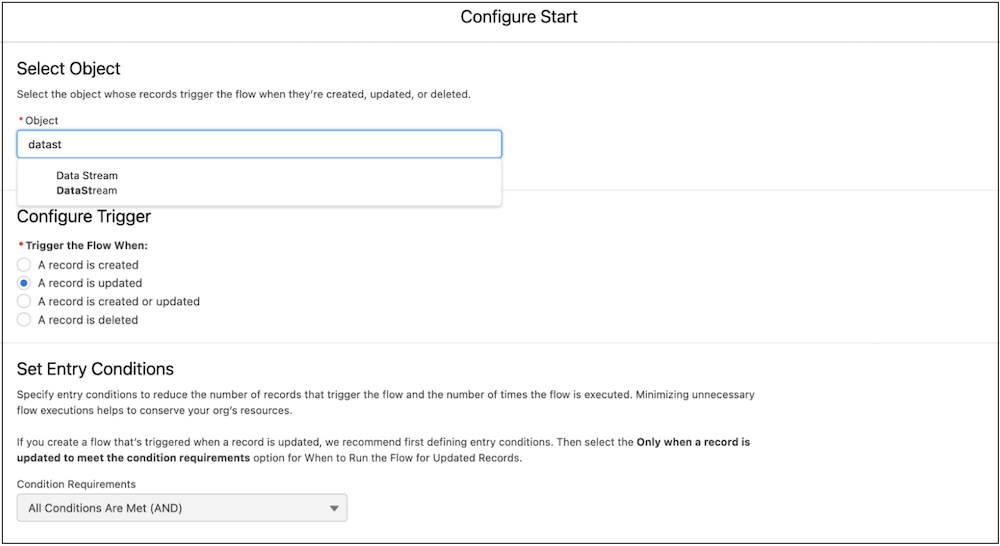

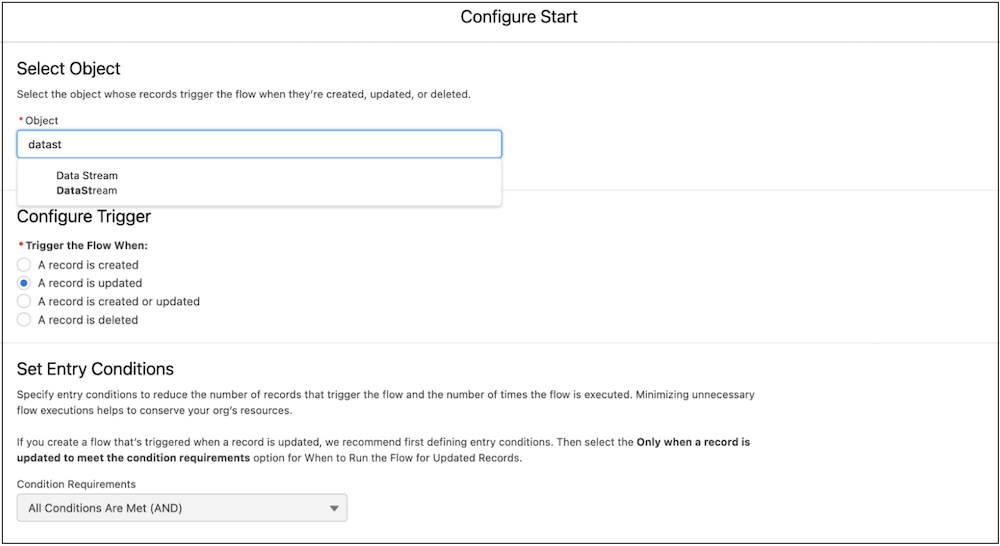

2. Configure the Start step of the record-triggered flow by choosing the appropriate object. For example, select the DataStream object for ingestion, the Calculated Insight object for calculated insights, and so on.

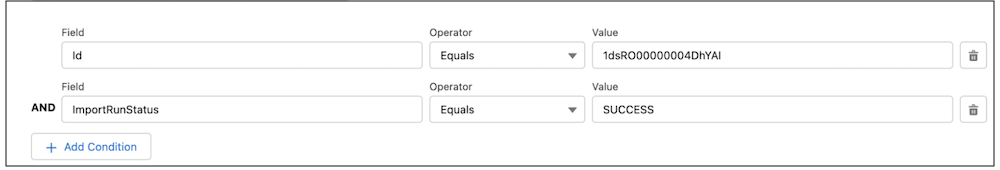

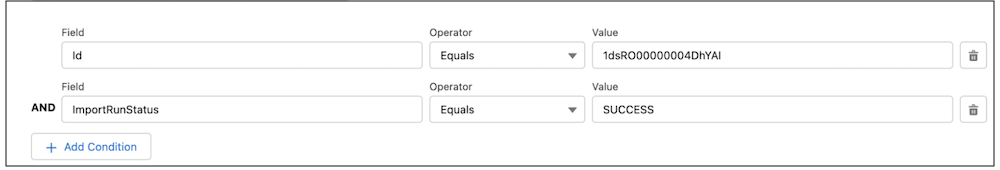

3. For our example use case, configure the flow to trigger when a record is updated (as shown in the image above). Then set the conditions (as shown in the image below) using the appropriate DataStream ID.

This ensures that the flow is triggered whenever the DataStream’s import run status changes to “Success” for the specified DataStream ID.

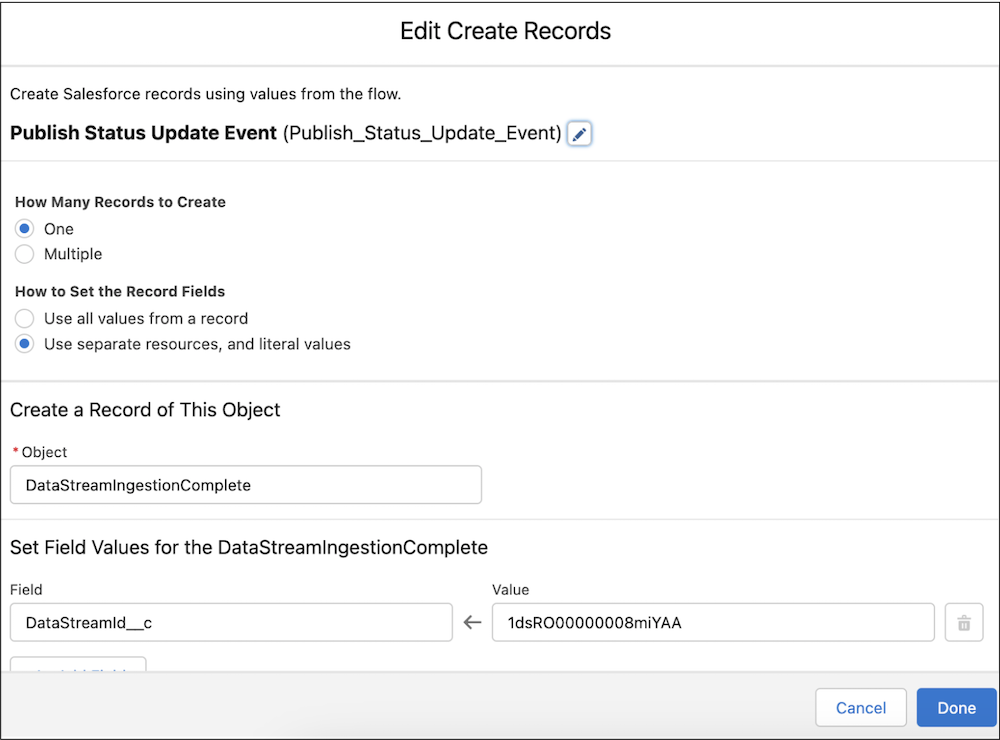

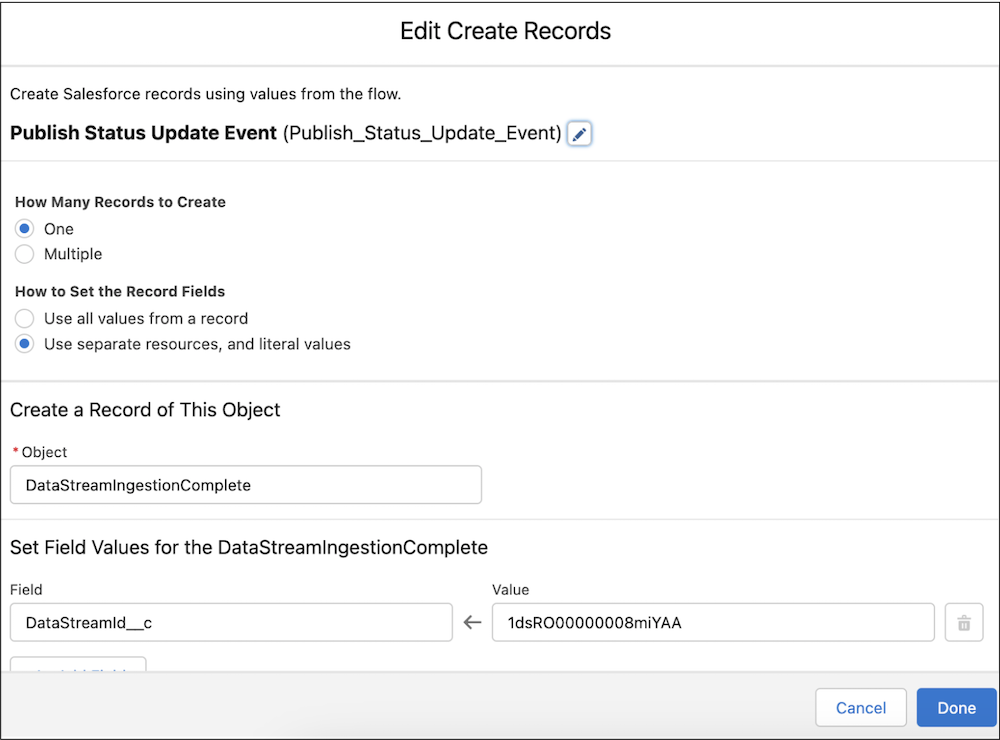
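The entry conditions from step 3 boil down to a simple predicate. The following Python sketch is only an illustration: the field names `Id` and `ImportRunStatus` are hypothetical stand-ins for the DataStream fields you select in Flow Builder. The check on the prior value is what makes the flow fire when the status changes to “Success” rather than on every update to a record that is already successful.

```python
def should_publish_event(old_record, new_record, stream_id):
    """Return True when the record-triggered flow should run.

    Mirrors the entry conditions: the record is the specified DataStream,
    its import run status is now "Success", and it was not "Success"
    before this update.
    """
    return (
        new_record.get("Id") == stream_id
        and new_record.get("ImportRunStatus") == "Success"
        and old_record.get("ImportRunStatus") != "Success"
    )


before = {"Id": "stream-A", "ImportRunStatus": "In Progress"}
after = {"Id": "stream-A", "ImportRunStatus": "Success"}
print(should_publish_event(before, after, "stream-A"))
```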

One of the steps in this record-triggered flow publishes the corresponding platform event. For example, in the record-triggered flow for “Refresh DataStream”, create a record of the data ingestion platform event and set its field to the DataStream ID.
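Inside the record-triggered flow, creating a record of the platform event is what publishes it. If you want to test your paused main flow without waiting for a real ingestion run, you can also publish the same event through the Salesforce REST API by sending a POST to the event’s sObject resource. The following Python sketch only builds that request; the event name `Data_Ingestion_Complete__e`, the field `Data_Stream_ID__c`, the instance URL, the token, and the API version are assumptions to replace with your own.

```python
import json
import urllib.request

API_VERSION = "v58.0"  # assumption: use an API version your org supports


def build_publish_request(instance_url, access_token, event_api_name, fields):
    """Build (but do not send) a REST request that publishes one platform event."""
    url = f"{instance_url}/services/data/{API_VERSION}/sobjects/{event_api_name}/"
    return urllib.request.Request(
        url,
        data=json.dumps(fields).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


request = build_publish_request(
    "https://example.my.salesforce.com",        # hypothetical instance URL
    "ACCESS_TOKEN",                             # placeholder OAuth access token
    "Data_Ingestion_Complete__e",               # hypothetical event API name
    {"Data_Stream_ID__c": "example-stream-id"}, # hypothetical field and value
)
# urllib.request.urlopen(request) would publish the event; omitted to avoid side effects.
```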

Similarly, create record-triggered flows for all four processes:

- Refresh DataStream

- Run Identity Resolution

- Publish Calculated Insights

- Publish Segment

Once you’ve created your record-triggered flows, the next step is to create your main autolaunched flow.

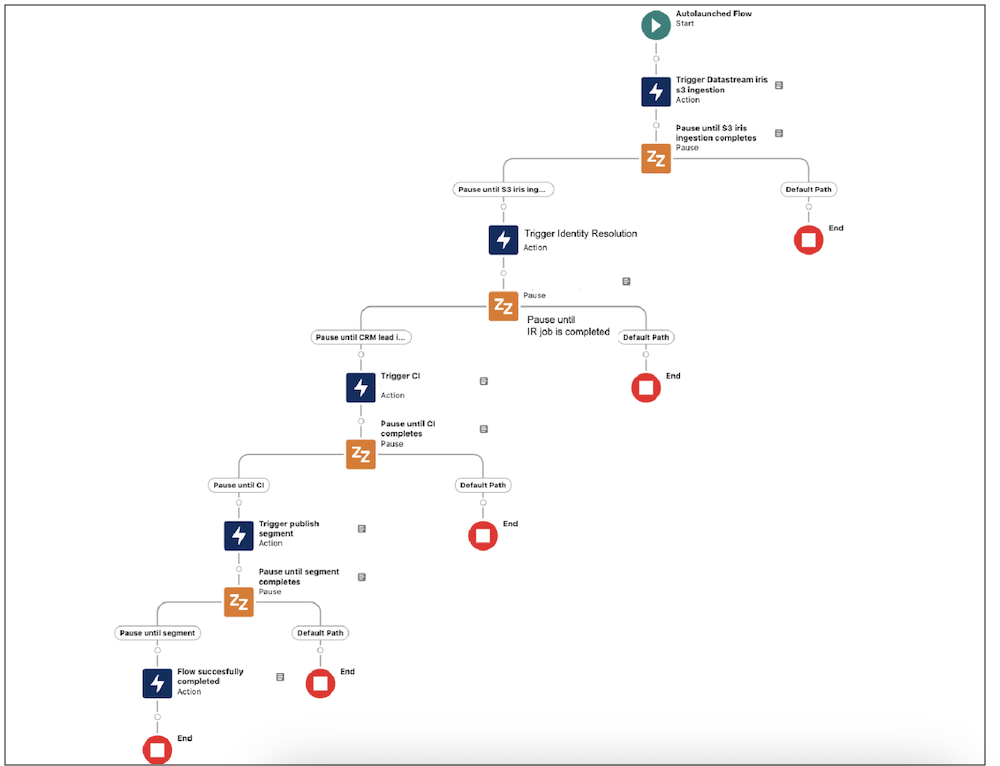

Create autolaunched flow

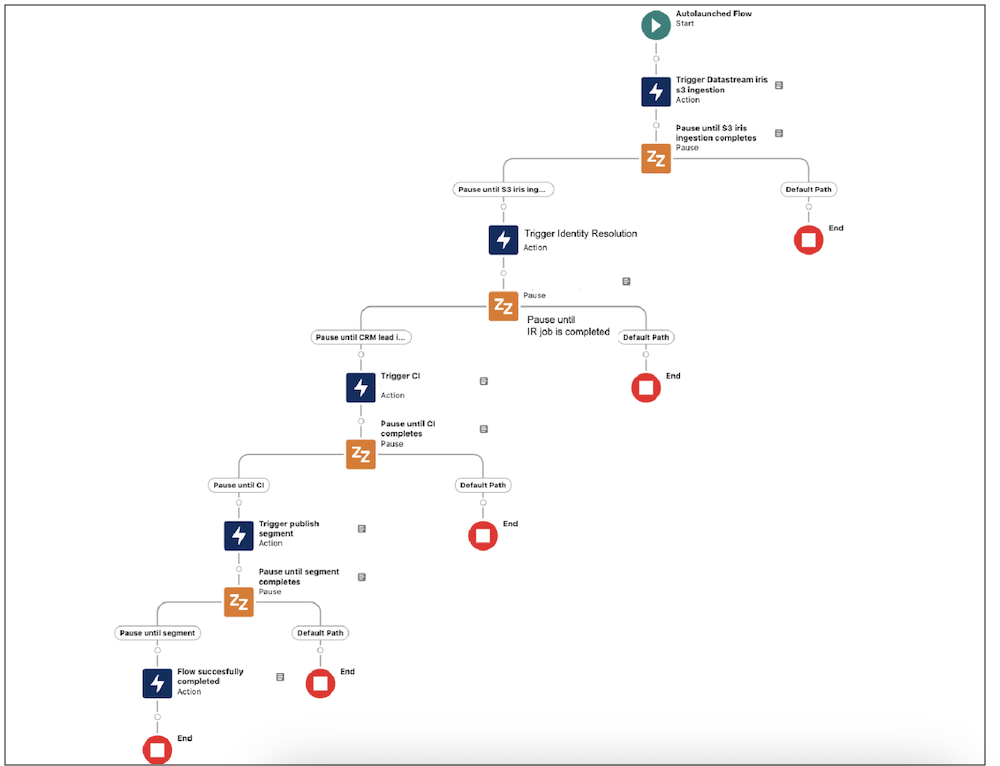

This is your main flow, which defines the end-to-end workflow for the business use case.

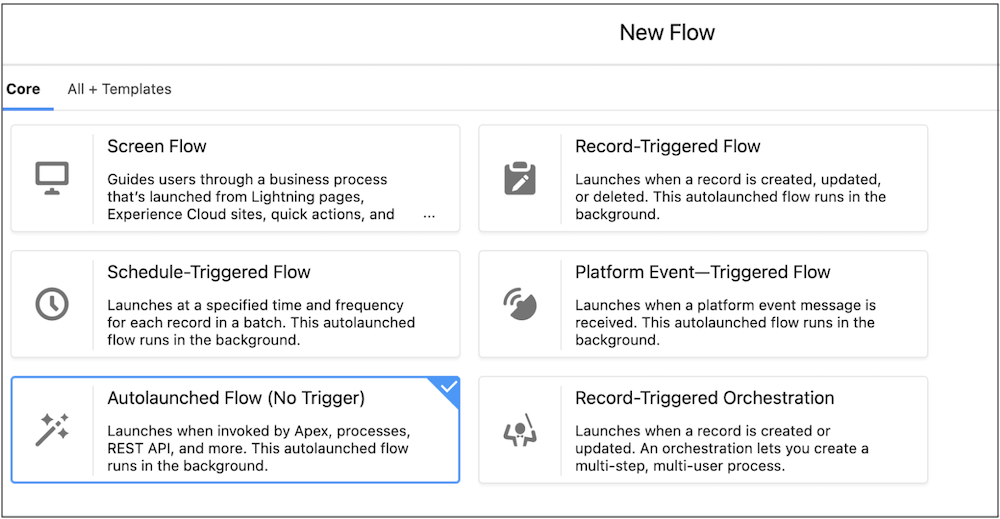

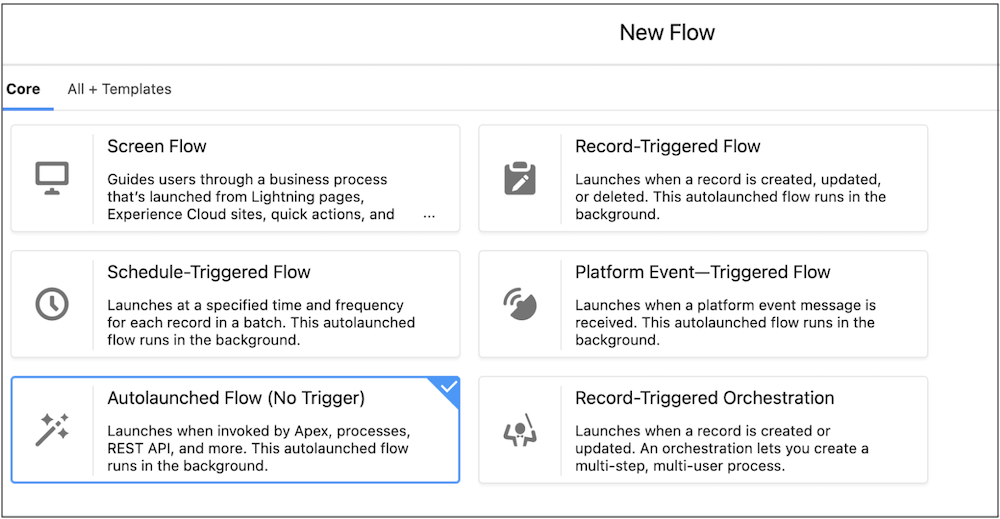

1. Go to Setup > Flows > New Flow > Autolaunched Flow (No Trigger).

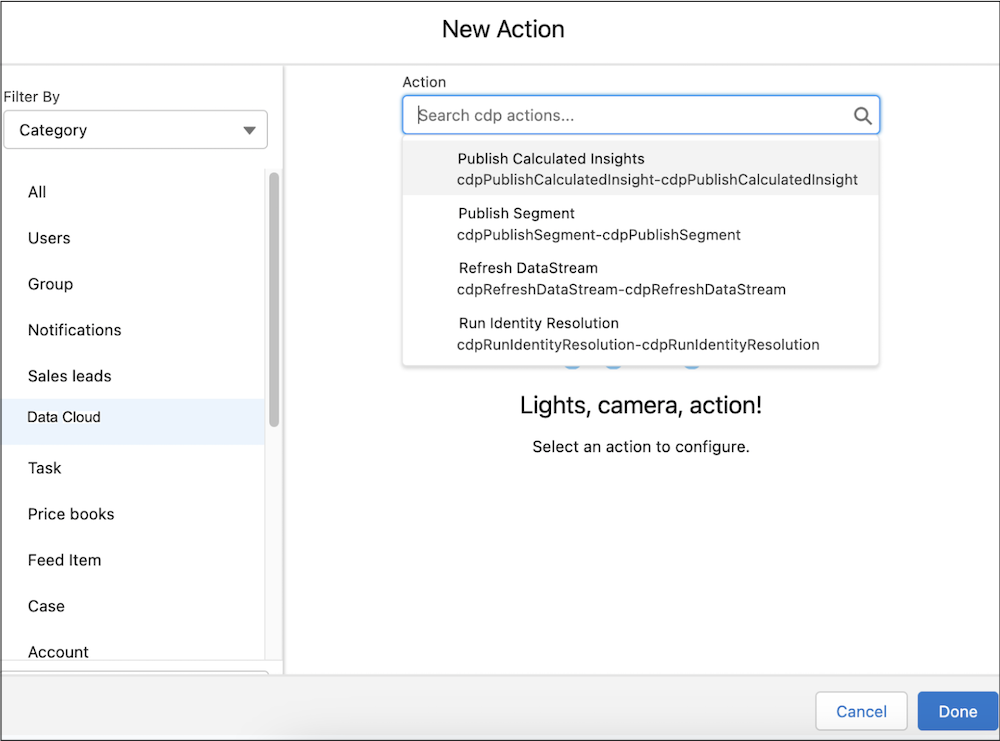

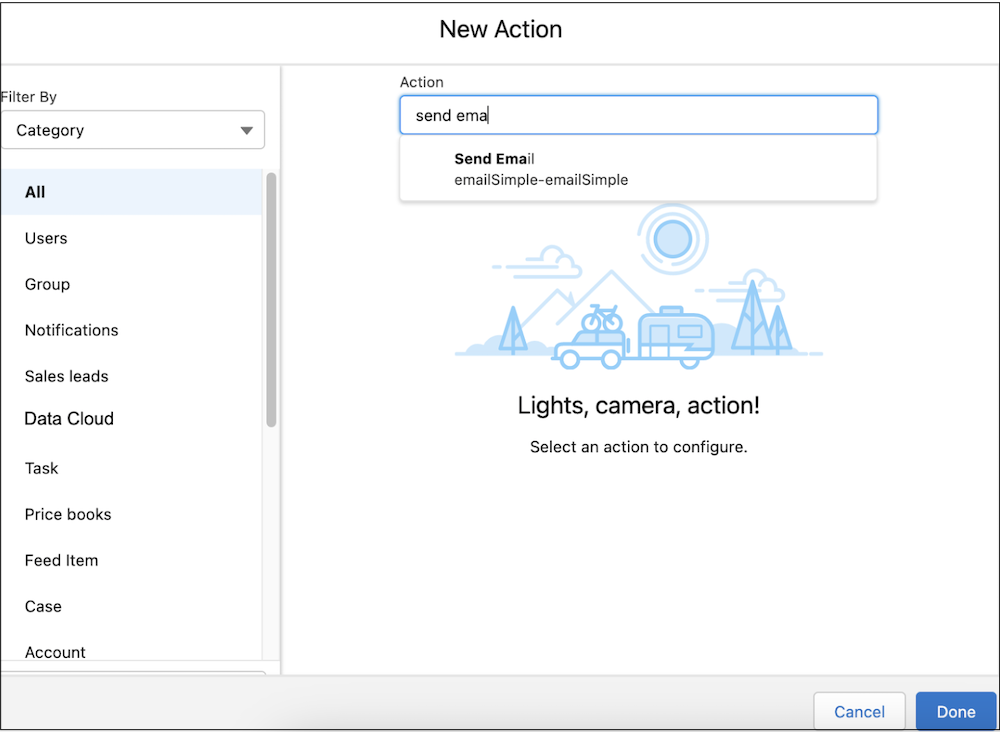

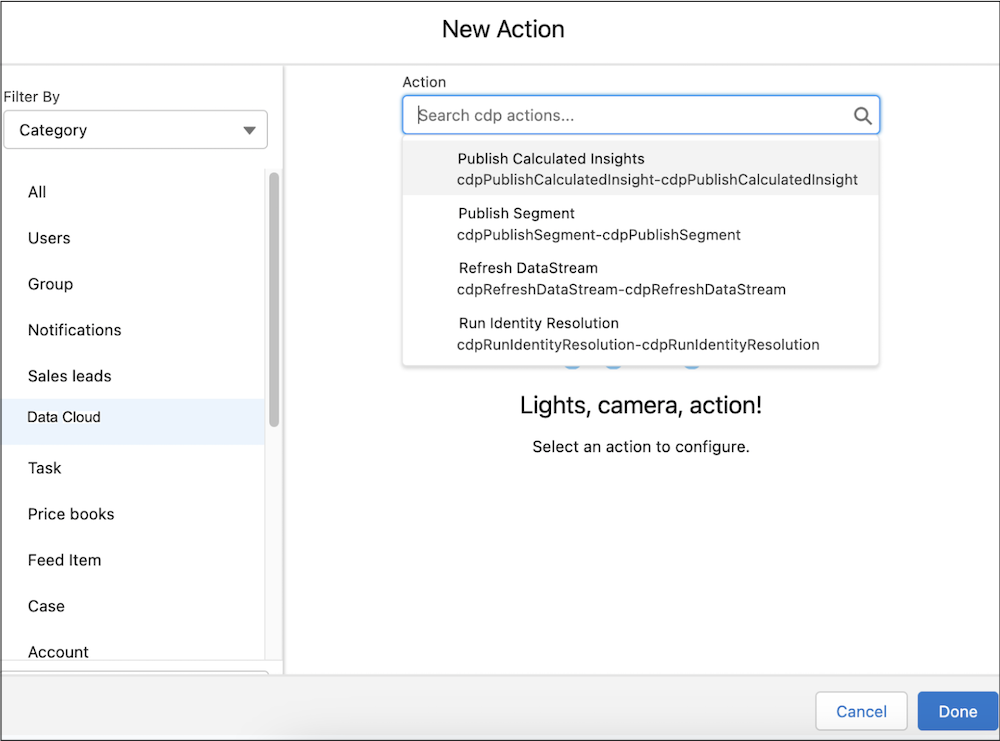

2. On the canvas, click Add Element and then Action. In the left pane, click Data Cloud, and then select a Data Cloud action. Enter the label, API name, and input values, and click Done.

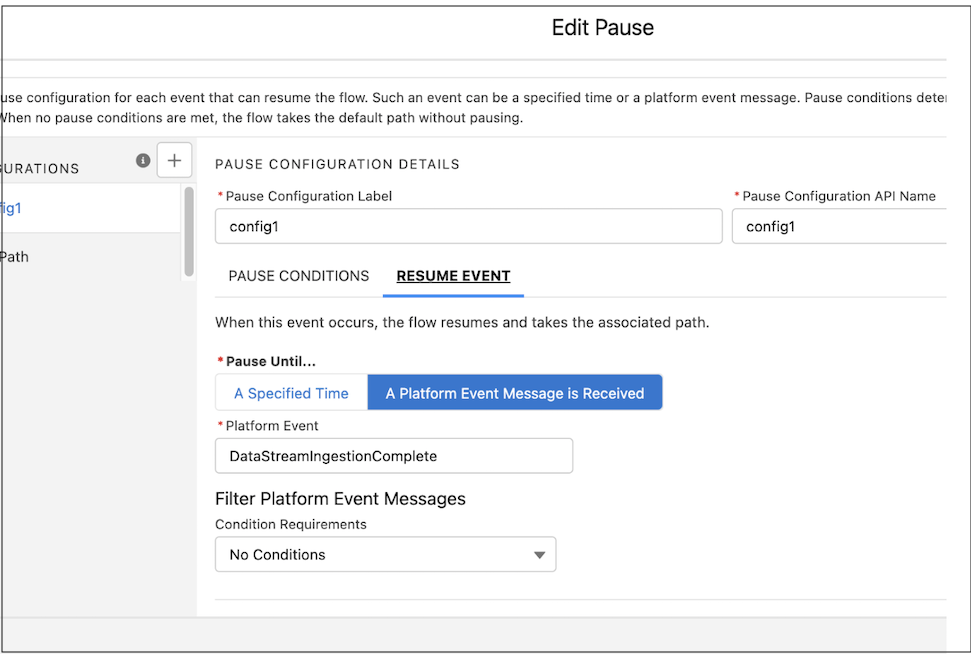

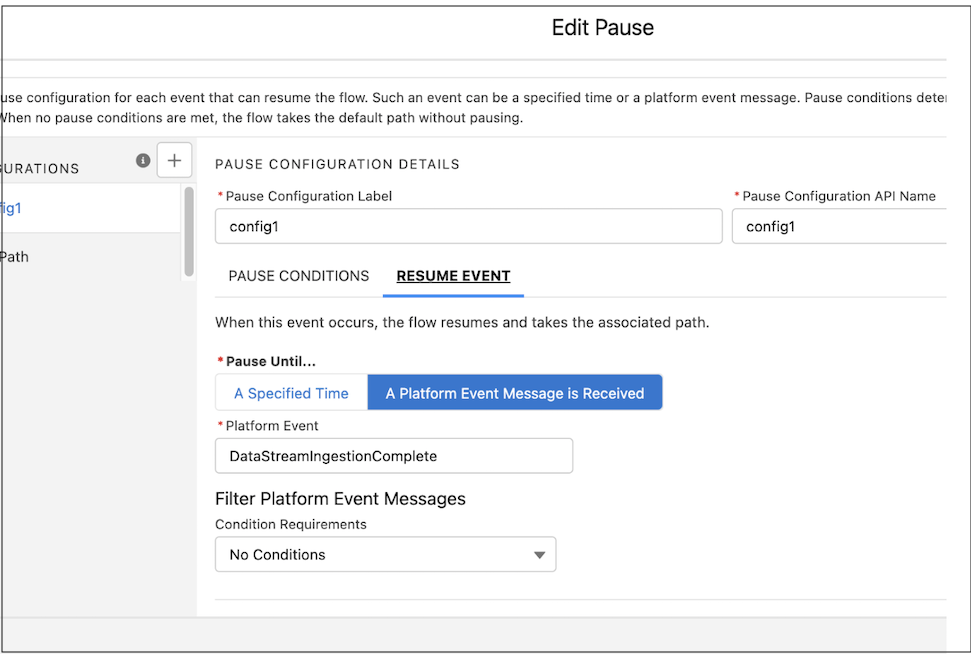

3. Select the Refresh DataStream action and enter the DataStream ID as the input value when prompted. Then add a Pause element so that the workflow waits until data ingestion is complete and the data ingestion platform event is published.

4. Configure the Pause element so that the workflow resumes only when that platform event is published.

5. Repeat steps 2 through 4 for the “Identity Resolution”, “Calculated Insights”, and “Publish Segments” actions. When you’re done, your main autolaunched flow looks like this:

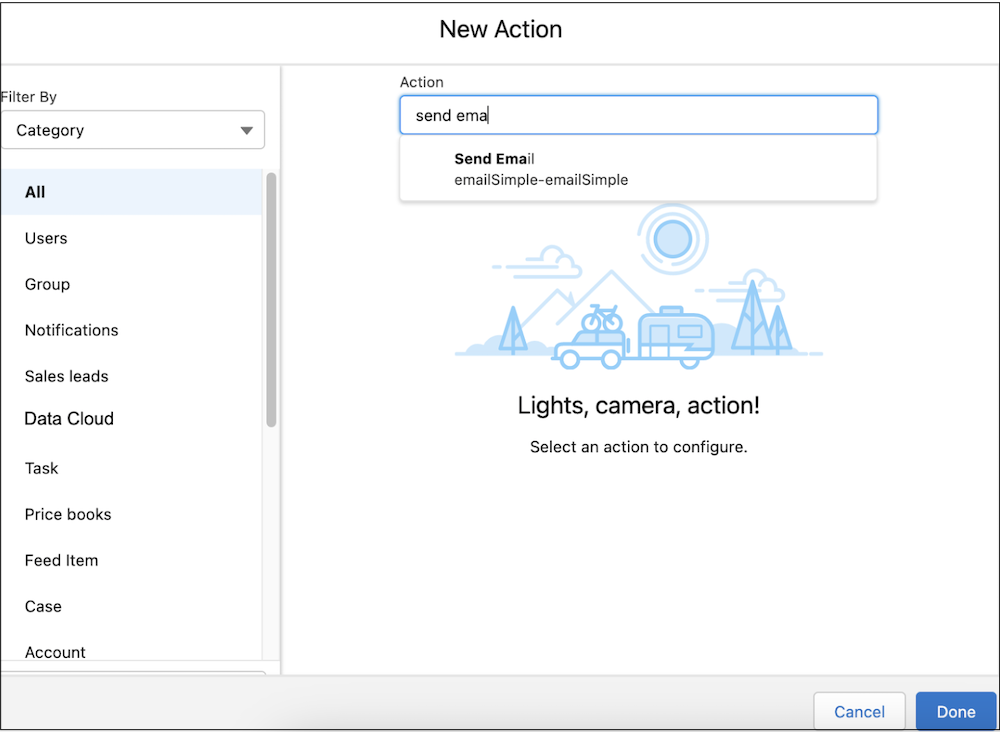

6. Finally, add the “Send Email” action as the last step to send an apology email to the impacted customers.

7. When your autolaunched flow is ready, you can run it manually from the UI by clicking Run. You can also trigger it through the API, or call it as a subflow from a record-triggered flow to fully automate the process.
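For the API option in step 7, the Salesforce REST API exposes flows as invocable actions under `/services/data/vXX.X/actions/custom/flow/<FlowApiName>`. The Python sketch below builds such a request without sending it. The flow API name `Compensate_Impacted_Customers`, the instance URL, the token, and the API version are placeholders, and the empty input map assumes the flow declares no input variables.

```python
import json
import urllib.request

API_VERSION = "v58.0"  # assumption: use an API version your org supports


def build_flow_invocation(instance_url, access_token, flow_api_name, inputs=None):
    """Build (but do not send) a POST request that runs an autolaunched flow."""
    url = f"{instance_url}/services/data/{API_VERSION}/actions/custom/flow/{flow_api_name}"
    # The request body takes a list of input maps; each map requests one invocation.
    payload = {"inputs": [inputs or {}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


request = build_flow_invocation(
    "https://example.my.salesforce.com",  # hypothetical instance URL
    "ACCESS_TOKEN",                       # placeholder OAuth access token
    "Compensate_Impacted_Customers",      # hypothetical flow API name
)
# urllib.request.urlopen(request) would start the flow; omitted to avoid side effects.
```

Keeping the request construction separate from sending it makes the call easy to inspect or reuse from a scheduler or an external system.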

Explore the combined power of Data Cloud and Flow

This use case is just a simple example. Flow is a powerful tool for building complex, enterprise-scale automation with automated triggers, reusable building blocks, and prebuilt solutions, and you can use it for many other use cases inside and outside of Data Cloud. For another example, showing how to use Flow to proactively notify team members of critical status changes in your Data Cloud implementation, check out the Medium blog post linked in the resources below.

Resources