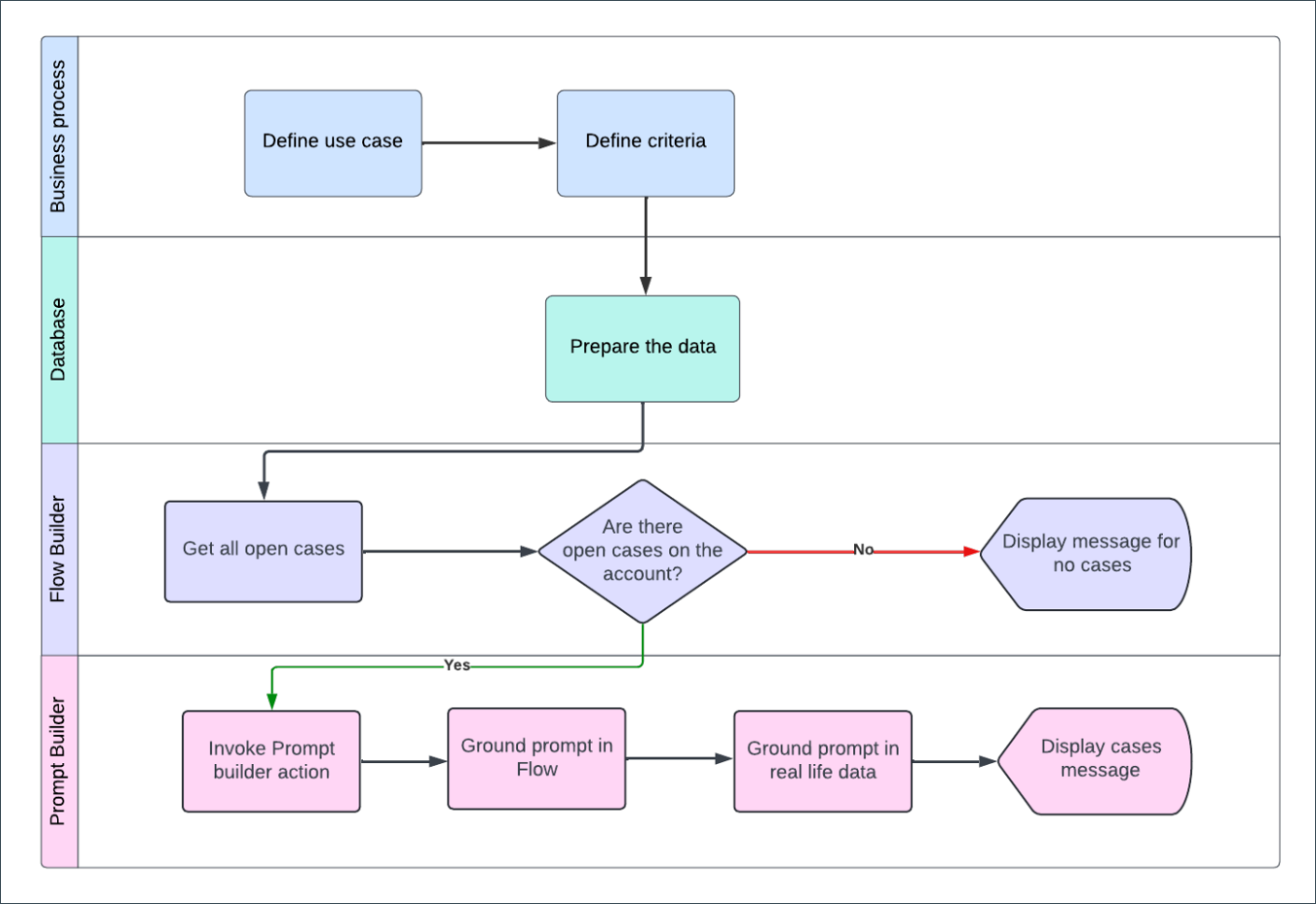

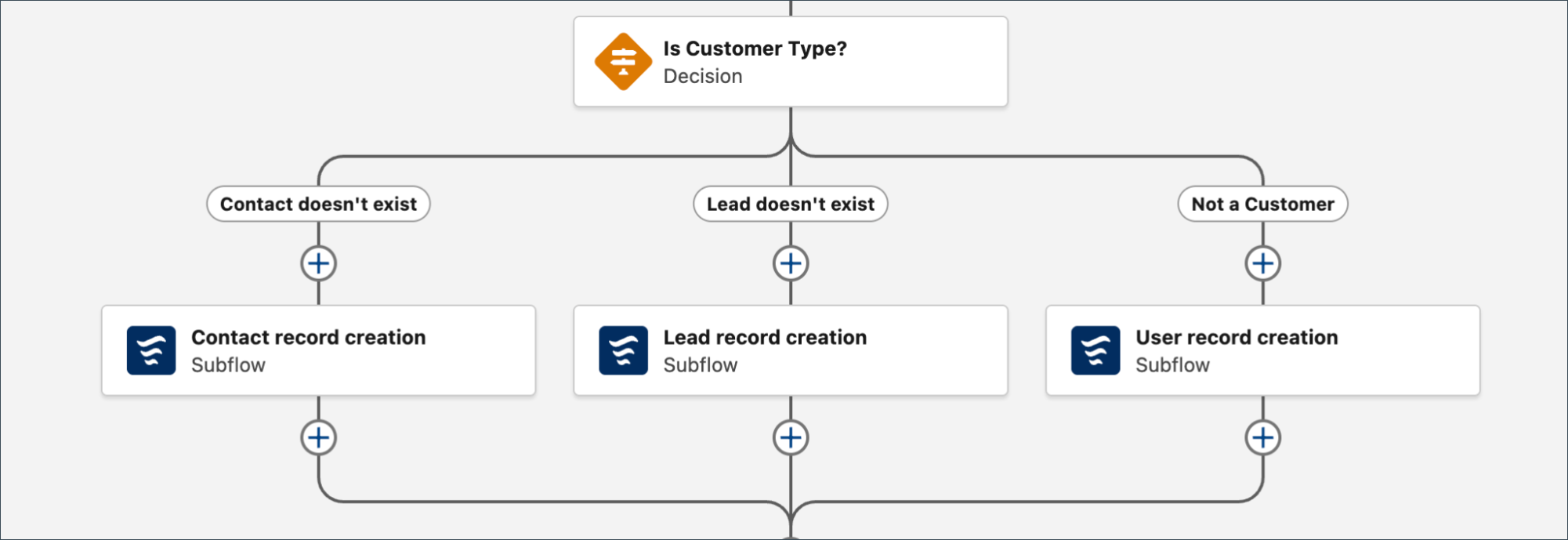

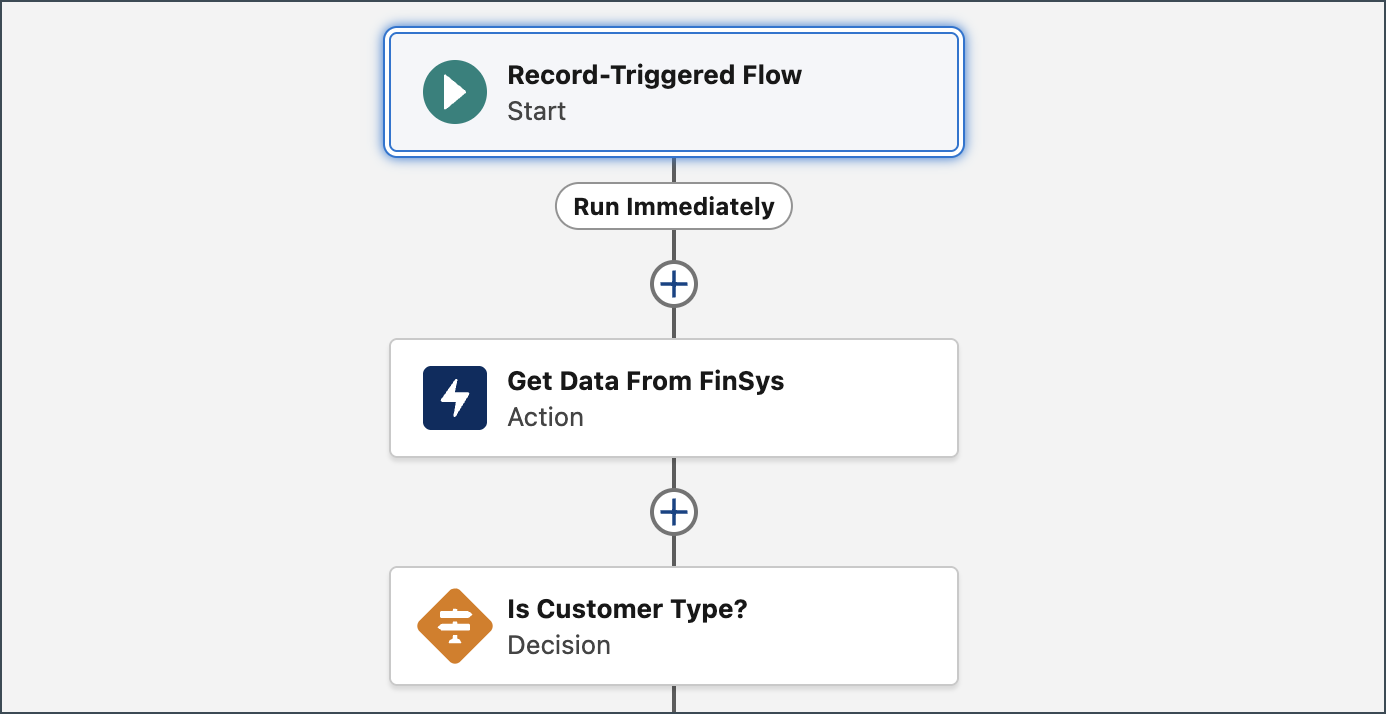

Welcome to “How I Solved It.” In this series, we do a deep dive into a specific business problem and share how one Awesome Admin chose to solve it. This episode has a twist—the admin is me! Are you tired of manually reading customer emails or messages and copying that data into Salesforce? In this […]